# A brief intro to using {pins} to store, version, share and protect a dataset

# on Posit Connect. Documentation: https://pins.rstudio.com/

# Setup -------------------------------------------------------------------

install.packages(c("pins", "dplyr")) # if not yet installed

suppressPackageStartupMessages({

library(pins)

library(dplyr) # for wrangling and the 'starwars' demo dataset

})

board <- board_connect() # will error if you haven't authenticated before

# Error in `check_auth()`: ! auth = `auto` has failed to find a way to authenticate:

# • `server` and `key` not provided for `auth = 'manual'`

# • Can't find CONNECT_SERVER and CONNECT_API_KEY envvars for `auth = 'envvar'`

# • rsconnect package not installed for `auth = 'rsconnect'`

# Run `rlang::last_trace()` to see where the error occurred.

# To authenticate

# In RStudio: Tools > Global Options > Publishing > Connect... > Posit Connect

# public URL of the Strategy Unit Posit Connect Server: connect.strategyunitwm.nhs.uk

# Your browser will open to the Posit Connect web page and you'll be prompted

# for your password. Enter it and you'll be authenticated.

# Once authenticated

board <- board_connect()

# Connecting to Posit Connect 2024.03.0 at

# <https://connect.strategyunitwm.nhs.uk>

board |> pin_list() # see all the pins on that board

# Create a pin ------------------------------------------------------------

# Write a dataset to the board as a pin

board |> pin_write(

x = starwars,

name = "starwars_demo"

)

# Guessing `type = 'rds'`

# Writing to pin 'matt.dray/starwars_demo'

board |> pin_exists("starwars_demo")

# ! Use a fully specified name including user name: "matt.dray/starwars_demo",

# not "starwars_demo".

# [1] TRUE

pin_name <- "matt.dray/starwars_demo"

board |> pin_exists(pin_name) # logical, TRUE/FALSE

board |> pin_meta(pin_name) # metadata, see also 'metadata' arg in pin_write()

board |> pin_browse(pin_name) # view the pin in the browser

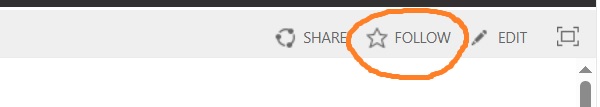

# Permissions -------------------------------------------------------------

# You can let people see and edit a pin. Log into Posit Connect and select the

# pin under 'Content'. In the 'Settings' panel on the right-hand side, adjust

# the 'sharing' options in the 'Access' tab.

# Overwrite and version ---------------------------------------------------

starwars_droids <- starwars |>

filter(species == "Droid") # beep boop

board |> pin_write(

starwars_droids,

pin_name,

type = "rds"

)

# Writing to pin 'matt.dray/starwars_demo'

board |> pin_versions(pin_name) # see version history

board |> pin_versions_prune(pin_name, n = 1) # remove history

board |> pin_versions(pin_name)

# What if you try to overwrite the data but it hasn't changed?

board |> pin_write(

starwars_droids,

pin_name,

type = "rds"

)

# ! The hash of pin "matt.dray/starwars_demo" has not changed.

# • Your pin will not be stored.
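The write, version and prune steps above can also be tried without a Connect server. The following is a minimal sketch, assuming a throwaway local board created with `board_temp(versioned = TRUE)`; the board name `demo_board`, the pin name `cars_demo` and the use of `mtcars` are illustrative choices, not part of the original demo.

```r
library(pins)

# A temporary local board (an assumption for illustration); it is removed
# when the R session ends, so nothing is written to Posit Connect
demo_board <- board_temp(versioned = TRUE)

# Write two different datasets to the same pin name to create two versions
demo_board |> pin_write(mtcars, "cars_demo", type = "rds")
demo_board |> pin_write(head(mtcars), "cars_demo", type = "rds")

# Inspect the version history (one row per stored version)
versions <- demo_board |> pin_versions("cars_demo")
nrow(versions)

# Keep only the most recent version
demo_board |> pin_versions_prune("cars_demo", n = 1)
versions_after_prune <- demo_board |> pin_versions("cars_demo")
```

Local boards do not need the `user.name/` prefix that Connect asks for, so the short pin name is enough here.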

# Use the pin -------------------------------------------------------------

# You can read a pin to your local machine, or access it from a Quarto file

# or Shiny app hosted on Connect, for example. If the output and the pin are

# both on Connect, no authentication is required; the board is defaulted to

# the Posit Connect instance where they're both hosted.

board |>

pin_read(pin_name) |> # like you would use e.g. read_csv

with(data = _, plot(mass, height)) # wow!
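When a pin is read inside a pipeline, it may be worth checking that it exists first. The sketch below is one possible approach rather than anything from the original demo: `read_pin_safely()` is a hypothetical helper, and it is exercised against a temporary local board so it can be run without Connect.

```r
library(pins)

# Hypothetical helper: read a pin if it exists, otherwise warn and return NULL
read_pin_safely <- function(board, name) {
  if (!pin_exists(board, name)) {
    warning("Pin '", name, "' was not found on this board.")
    return(NULL)
  }
  pin_read(board, name)
}

# Try it on a temporary local board (an assumption for illustration)
demo_board <- board_temp()
demo_board |> pin_write(mtcars, "cars_demo", type = "rds")

cars <- read_pin_safely(demo_board, "cars_demo")
missing_pin <- suppressWarnings(read_pin_safely(demo_board, "not_a_pin"))
```

The same helper should work with the board returned by `board_connect()`, given a fully specified pin name such as `pin_name` above.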

# Delete pin --------------------------------------------------------------

board |> pin_exists(pin_name) # logical, good function for error handling

board |> pin_delete(pin_name)

board |> pin_exists(pin_name)

Storing data safely

UPDATED: Please see the Addendum to this blog, added 2025-04-03, regarding accessing data from SharePoint.

Coffee & Coding

In a recent Coffee & Coding session we chatted about storing data safely for use in Reproducible Analytical Pipelines (RAP), and the slides from the presentation are now available. We discussed the use of Posit Connect Pins and Azure Storage.

To avoid duplication, this blog post will not cover the pros and cons of each approach, and will instead focus on documenting the code that was used in our live demonstrations. I would recommend that you look through the slides before using the code in this blog post and keep them alongside, as they provide lots of useful context!

Posit Connect Pins

Azure Storage in R

You will need an .Renviron file with the four environment variables listed below for the code to work. This .Renviron file should be ignored by git. You can share the contents of .Renviron files with other team members via Teams, email, or SharePoint.

Below is a sample .Renviron file

AZ_STORAGE_EP=https://STORAGEACCOUNT.blob.core.windows.net/

AZ_STORAGE_CONTAINER=container-name

AZ_TENANT_ID=long-sequence-of-numbers-and-letters

AZ_APP_ID=another-long-sequence-of-numbers-and-letters

install.packages(c("AzureAuth", "AzureStor", "arrow")) # if not yet installed
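Before authenticating, it can help to confirm that all four variables from the .Renviron file have actually been picked up by the R session. This is a minimal sketch using base R only; `find_missing_env_vars()` is a hypothetical helper name, not part of AzureAuth or AzureStor.

```r
# Names of the four variables from the sample .Renviron file
required_vars <- c(
  "AZ_STORAGE_EP",
  "AZ_STORAGE_CONTAINER",
  "AZ_TENANT_ID",
  "AZ_APP_ID"
)

# Hypothetical helper: return the names of variables that are unset or empty
find_missing_env_vars <- function(vars) {
  values <- Sys.getenv(vars, unset = "")
  vars[!nzchar(values)]
}

missing_vars <- find_missing_env_vars(required_vars)

if (length(missing_vars) > 0) {
  warning(
    "Missing environment variables: ",
    paste(missing_vars, collapse = ", ")
  )
}
```

If any names are reported, check that the .Renviron file is in the project root and restart R so that it is re-read.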

# Load all environment variables

ep_uri <- Sys.getenv("AZ_STORAGE_EP")

app_id <- Sys.getenv("AZ_APP_ID")

container_name <- Sys.getenv("AZ_STORAGE_CONTAINER")

tenant <- Sys.getenv("AZ_TENANT_ID")

# Authenticate

token <- AzureAuth::get_azure_token(

"https://storage.azure.com",

tenant = tenant,

app = app_id,

auth_type = "device_code"

)

# If you have not authenticated before, you will be taken to an external page to

# authenticate! Use your mlcsu.nhs.uk account.

# Connect to container

endpoint <- AzureStor::blob_endpoint(ep_uri, token = token)

container <- AzureStor::storage_container(endpoint, container_name)

# List files in container

blob_list <- AzureStor::list_blobs(container)

# If you get a 403 error when trying to interact with the container, you may

# have to clear your Azure token and re-authenticate using a different browser.

# Use AzureAuth::clean_token_directory() to clear your token, then repeat the

# AzureAuth::get_azure_token() step above.

# Upload specific file to container

AzureStor::storage_upload(container, "data/ronald.jpeg", "newdir/ronald.jpeg")

# Upload contents of a local directory to container

AzureStor::storage_multiupload(container, "data/*", "newdir")

# Check files have uploaded

blob_list <- AzureStor::list_blobs(container)

# Load file directly from Azure container

df_from_azure <- AzureStor::storage_read_csv(

container,

"newdir/cats.csv",

show_col_types = FALSE

)

# Load file directly from Azure container (by temporarily downloading file

# and storing it in memory)

parquet_in_memory <- AzureStor::storage_download(

container,

src = "newdir/cats.parquet", dest = NULL

)

parq_df <- arrow::read_parquet(parquet_in_memory)
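The in-memory step above relies on `arrow::read_parquet()` accepting a raw vector as well as a file path. The sketch below shows that behaviour locally, with no Azure connection: the bytes of a temporary Parquet file stand in for the raw vector returned by `storage_download()` when `dest = NULL`. The example data frame and object names are made up for illustration.

```r
library(arrow)

# Write a small example data frame to a temporary Parquet file
tmp <- tempfile(fileext = ".parquet")
example_cats <- data.frame(name = c("Tom", "Felix"), age = c(3, 7))
write_parquet(example_cats, tmp)

# Read the file's bytes into a raw vector; this stands in for the output of
# AzureStor::storage_download(container, src = ..., dest = NULL)
parquet_bytes <- readBin(tmp, what = "raw", n = file.size(tmp))

# read_parquet() can parse the raw vector directly, with no file on disk
cats <- read_parquet(parquet_bytes)
```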

# Delete from Azure container (!!!)

for (blobfile in blob_list$name) {

AzureStor::delete_storage_file(container, blobfile)

}

Azure Storage in Python

This will use the same environment variables as the R version, just stored in a .env file instead.

We didn’t cover this in the presentation, so it’s not in the slides, but the code should be self-explanatory.