Large Language Models (LLMs): Is this AI?

Data Science team, Strategy Unit

Oct 10, 2024

Generative AI ✨

- Creates new content

- Trained on lots of examples

- Can mimic creativity

Modalities 🖼️

- Images (e.g. DALL-E)

- Audio/video (e.g. inVideo AI)

- Text (e.g. ChatGPT)

Text 🔤

- Spam filters

- Sentiment

- Topic detection

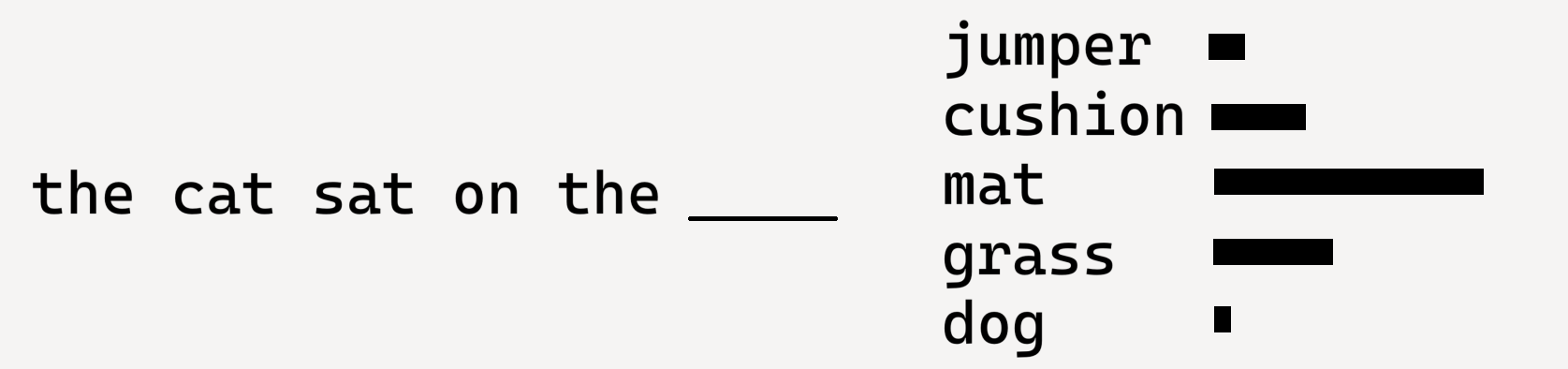

- Word prediction

- …

Large Language Models (LLMs) 🦜

- A (fancy?) parrot

- Learns from lots of text

- Predicts next word
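To make "learns from lots of text, predicts next word" concrete, here is a toy sketch in Python. It is a simple bigram counter and not how a real LLM works internally (real models use neural networks trained on vastly more text). The function names `train_bigrams` and `predict_next` and the example sentence are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical training text, invented for this example
corpus = "the patient saw the doctor and the patient felt better"
model = train_bigrams(corpus)

print(predict_next(model, "the"))    # prints "patient" (seen twice after "the")
print(predict_next(model, "nurse"))  # prints None (word never seen in training)
```

Like a parrot, the sketch can only repeat patterns it has already seen: it has no answer for a word that was not in its training text.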

In healthcare 🏥

- Organise medical documents

- Drug discovery research

- Chatbots

- …

IRL models 🤖

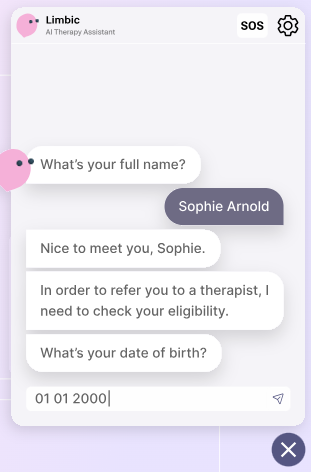

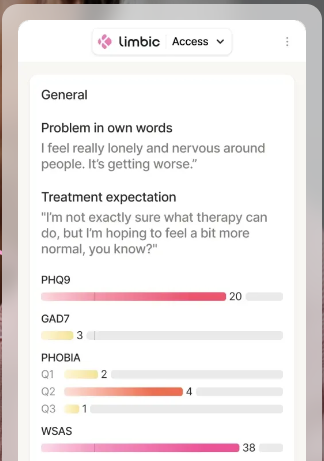

Case study 🧠

- NHS: chatbot for mental health referrals

- Used Limbic's ‘Access’ e-triage chatbot

- According to Limbic:

‘Nearly 40% of NHS Talking Therapies already trust Limbic to improve their services’

Case study 🧠

- From the NHS-E Transformation Directorate write-up:

  - ~99% of patients who left feedback said that Limbic was helpful

  - the service has seen a 30% increase in referrals, and initial evidence indicates that Limbic improved out-of-hours access

  - on a pro-rata basis, a saving of 3,000 hours (4 psychological wellbeing practitioners)

  - nearly 20% of referrals were identified as ineligible and signposted to a more appropriate service

But… ⚠️

The effect of using a large language model to respond to patient messages (The Lancet Digital Health)

‘…raises the question of the extent to which LLM assistance is decision support versus LLM-based decision making’

‘…a minority of LLM drafts, if left unedited, could lead to severe harm or death’

Pros ➕

- For providers: could reduce pressure

- For users: increases service accessibility

- Can be trained for domain specificity

Cons ➖

- Ethical issues, like:

  - bias

  - computational cost

  - data origins

  - privacy

- Not human

- It lies (it can state falsehoods with confidence)

Consider 🤔

- Are there legal issues?

- Have you considered user needs?

- Are there policies you should follow (e.g. HM Government, NHS, trust)?

To ponder ❓

- Is this AI?

- Are LLMs an appropriate tool in healthcare?

- How might you feel interacting with an LLM-driven service?

- How can we protect patient privacy?

- How do we deal with LLMs as tools for decision support vs decision making?

- Who is responsible for errors, or even death?

Further reading 📚

- NHS Knowledge and Library Services: AI

- 3Blue1Brown (YouTube)

- Computerphile (YouTube)

- GOV.UK chatbot experiment

view slides at the-strategy-unit.github.io/data_science/presentations