pxtextmining API: enabling access to project outputs

Our latest major project milestone is the release of a model API. This blog post will explain what this is, how we have achieved it, and what our next steps are.

What is an API?

API stands for “application programming interface”. Most of the digital services and platforms that we use have an API. It is essentially a gateway that allows access to information. For example, when you ask Alexa or Siri what the weather is, it will communicate with a weather service API to obtain the information for you. When you get an email notification on your phone, this is because your email service provider is sending a notice to your phone via its API.

Still confused? This video may help.

The pxtextmining API allows people to utilise the machine learning models that we have trained for the project to label new text. It makes the model accessible over the internet, without any installation or downloads required. All that’s needed is a few lines of code. Examples are available on the pxtextmining documentation website.

Why did we do it?

The API was created to separate our R/Shiny-based experiencesdashboard frontend and the Python-based pxtextmining backend. This independence means more flexibility in the development of each. This also means that some participating trusts can utilise the model only, if they wish, and integrate it into their existing visualisation tools/dashboards for exploring patient feedback data.

We also recognise that although the code and models for pxtextmining are openly available on GitHub, many organisations may not have the technical resources, time, or capability to run them themselves. Making the model available via an API lowers the barrier to entry and ensures that the project outputs are more accessible.

How did we do it?

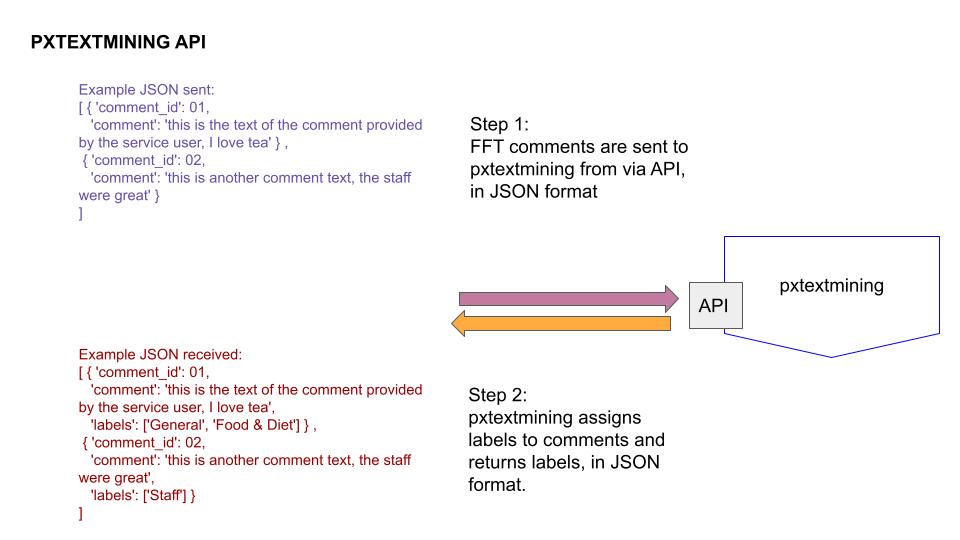

The API is built using the open source Python FastAPI package. Users of the API must format their data as JSON and submit it to the API endpoint via a POST request. The data is then cleaned and passed through the machine learning model, and the predicted labels are returned, also as JSON. We used the Uvicorn library to test the API locally, and have ensured that the API is covered by unit tests. We are hosting the API on the Strategy Unit’s RStudio Connect server. Deploying it was very simple, luckily! The diagram above shows how the API works.
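To make the request flow concrete, here is a minimal Python sketch of a client that packages comments as JSON and prepares a POST request. It is an illustration only, not the project's official example script: the URL is a placeholder, and the field names `comment_id` and `comment_text` are assumptions, so check the pxtextmining documentation website for the real endpoint and schema.

```python
import json
import urllib.request

# Placeholder URL (assumption): replace with the real endpoint from the docs.
API_URL = "https://example.org/pxtextmining/predict"


def build_request(comments, url=API_URL):
    """Package a list of comment strings as a JSON POST request."""
    # Field names here are assumed for illustration, not taken from the API schema.
    payload = [
        {"comment_id": str(i), "comment_text": text}
        for i, text in enumerate(comments, start=1)
    ]
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def get_labels(comments, url=API_URL, timeout=60):
    """Send the request and decode the JSON response returned by the API."""
    request = build_request(comments, url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Calling `get_labels(["The nurses were very kind."])` against a real endpoint would return whatever JSON the API sends back; the shape of that response is not assumed here.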

What’s next?

The API currently uses a support vector classifier model which is quite small and fast. Even so, we have to balance the load on the server with waiting times, limiting the number of requests to 1000 at a time to prevent timeouts. We also have a transformer-based model which is very large and slow, taking several minutes to make predictions from text, and we have not yet implemented an API for it. Our next step is to figure out how to do this without completely taking over the server’s resources or degrading the user experience too much.
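For anyone with more text than the limit allows, one option is to split it into batches on the client side before submitting. The sketch below assumes the 1000 limit applies to the number of comments in a single submission, which is an interpretation rather than something confirmed by the API itself; `batched` is a hypothetical helper name.

```python
def batched(items, batch_size=1000):
    """Yield consecutive slices of `items`, each at most `batch_size` long."""
    # batch_size defaults to 1000 on the assumption that this is the per-submission limit.
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


# Example: 2500 comments are split into batches of 1000, 1000 and 500.
comments = [f"comment {i}" for i in range(2500)]
batch_sizes = [len(batch) for batch in batched(comments)]
```

Each batch could then be submitted as its own request, with the results concatenated afterwards.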

We also want to make sure that participating trusts are able to make use of the API, and help demystify it. Communication is key, and blog posts like this will hopefully help. We are also providing example scripts showing how to create API queries in R and Python, and have built a simple website that shows the API and the model in action.

The model used in the API is also still a work in progress, and we know we have a lot of work to do to improve its performance. But now that the technical infrastructure is in place, it should be relatively easy to update it with new models as we train them. If you’re interested in using the API, do get in touch!